capito.ai

On-premises solutions for your needs

With an on-premises installation you can host capito digital on your own servers.

All capito.ai services are available as on-premises solutions. You have full access to capito digital.

Our CI/CD process is designed to make on-premises installation and software updates as easy as possible.

With an on-premises installation, you can choose where the software is hosted. You have full control over your data.

Simplification API

capito.ai automatically simplifies texts into three easy-to-understand language levels.

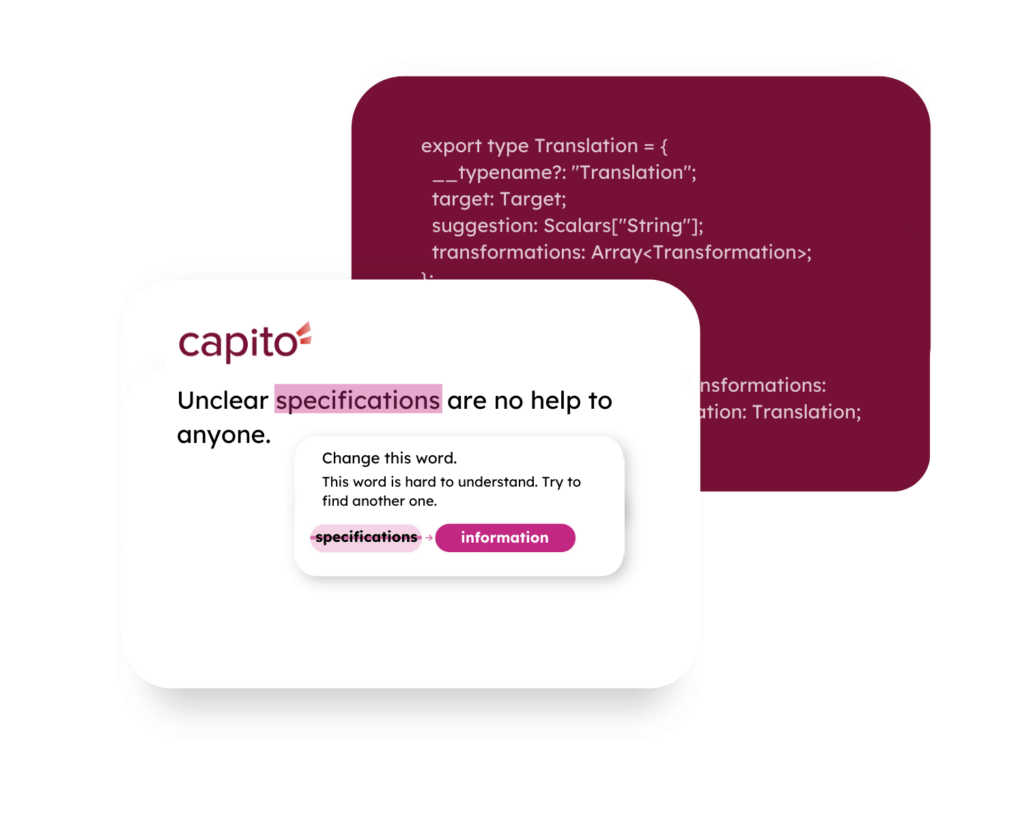

Analysis API

capito.ai checks texts for comprehensibility and shows which simplifications can be made.

Suggestions API

For difficult words, capito.ai suggests easy-to-understand alternatives.

Lexicon API

Easy-to-understand explanations of terms can be requested directly.

Any questions?

For more information and answers to frequently asked questions about our on-premises solutions, please see our on-premises FAQ.

Don’t hesitate to reach out to us!

capito is Italian and means “I get it.”

In the future, everyone should be able to say: “I have understood.”

Phone: +43 316 393 449

Email: office@capito.eu

Headquarters

Heinrichstraße 145

8010 Graz

Austria

U.S. division

1247 Wisconsin Avenue NW, Suite 201

Washington, DC 20007

USA

capito is part of the atempo group.